Understanding 3D Object Detection: Techniques and Applications

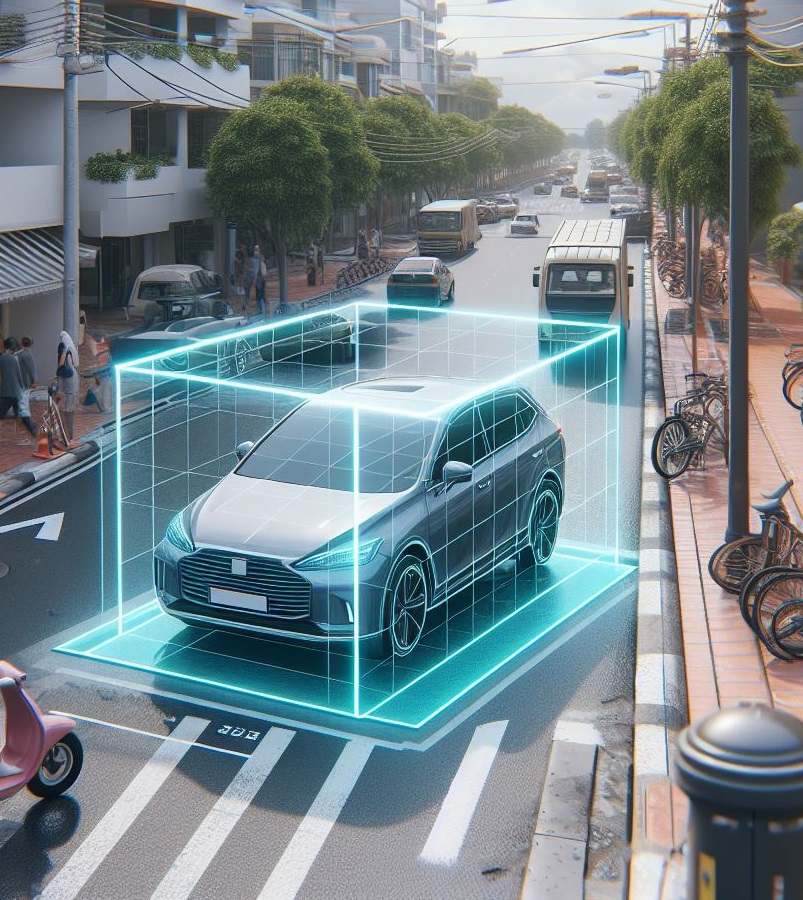

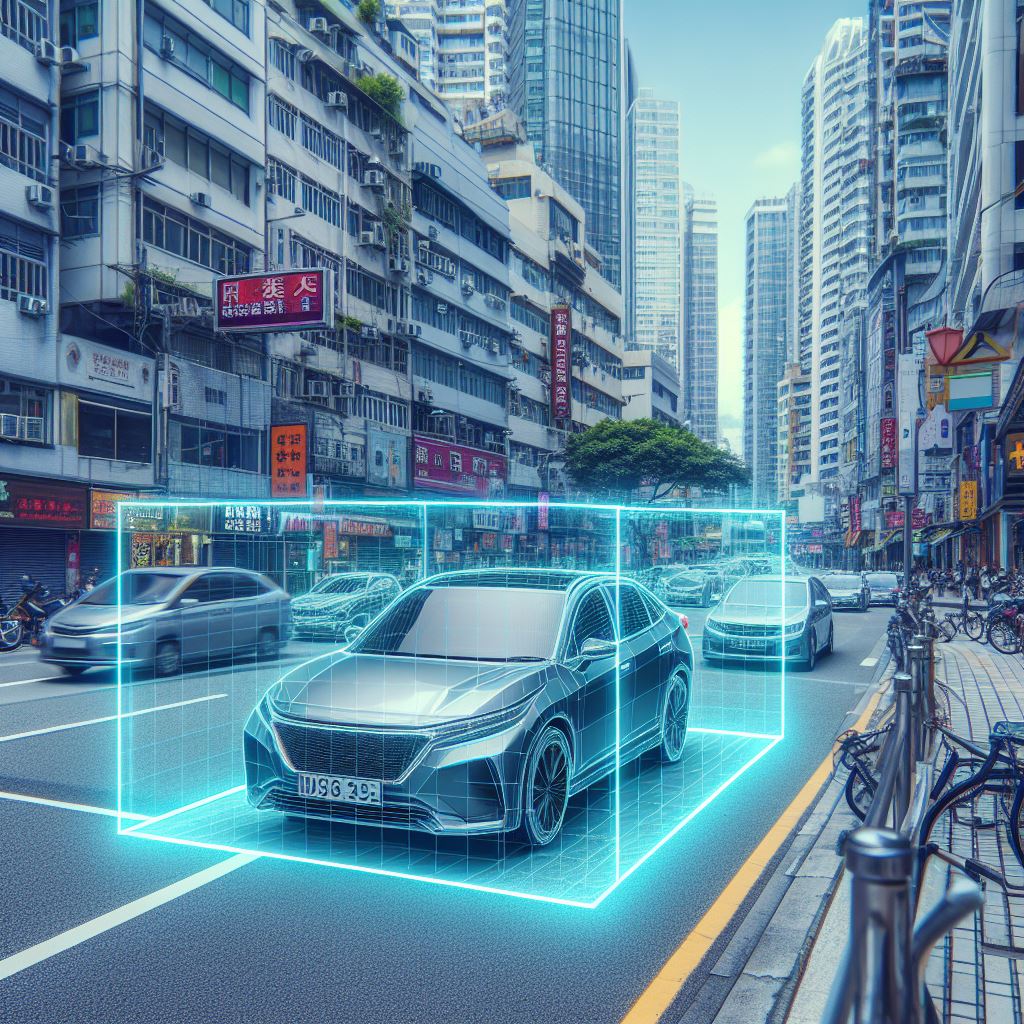

Imagine a box in the real world, not just on a flat picture. That’s a 3D bounding box! It tells us exactly where an object is in space, how big it is (length, width, height), and even which way it’s facing. This is crucial for things like self-driving cars. By using cameras and sensors, the car can create a 3D picture of the world and use bounding boxes to identify objects like other cars and pedestrians. This lets the car know their position and make safe decisions on the road.

Understanding 3D Bounding Box Detection

3D bounding box detection is a crucial task within the realm of computer vision, particularly in the domain of object detection. Unlike its 2D counterpart, which operates solely in a two-dimensional image plane, 3D bounding box detection extends this concept into the third dimension, enabling the localization and classification of objects within a three-dimensional space. This task is essential for a wide range of applications, including autonomous driving, robotics, augmented reality, and urban planning, where accurate perception of the environment is paramount for decision-making and interaction.

Imagine a self-driving car equipped with cameras and LiDAR sensors. The LiDAR data creates a 3D point cloud of the environment, while the camera captures a visual scene. A 3D bounding box detection system analyzes both. Based on the image features and precise depth information, it recognizes a car in front, pinpointing its location (distance and lane position), size (length, width, and height), and orientation (whether it’s coming towards you or parked). This allows the self-driving car to react accordingly, maintaining a safe distance or planning a lane change.

Principles of 3D Bounding Box Detection

At its core, 3D bounding box detection involves two primary steps: object localization and object classification. Object localization refers to the process of precisely determining the position and orientation of objects within the 3D space, while object classification entails assigning semantic labels to the detected objects based on their visual characteristics. These steps are typically performed using sensor data captured from sources such as LiDAR or depth cameras, which provide valuable depth information crucial for accurately reconstructing 3D scenes and detecting objects within them.

Techniques and Approaches for Bounding Box Detection

Accurately pinpointing objects in three dimensions is crucial for various applications, from self-driving cars navigating the real world to robots manipulating objects in their environment. This task, known as 3D bounding box detection, utilizes various techniques and approaches to achieve this goal. Here, we delve into four prominent methods for tackling this challenge:

- Monocular Camera-Based Detection: This approach leverages information from a single image to estimate a 3D bounding box. Deep learning plays a central role here, with convolutional neural networks (CNNs) trained to extract features from the image. These features are then used to predict the 2D bounding box of the object and subsequently estimate its 3D pose (orientation and dimensions) using techniques like direct regression or leveraging geometric constraints. While computationally efficient, this method can struggle with depth ambiguity inherent in single-view images.

- LiDAR-Based Detection: LiDAR (Light Detection and Ranging) sensors directly capture 3D point cloud data, providing rich information about the scene’s depth. Techniques like PointNet++ leverage this data to directly predict 3D bounding boxes around objects. This approach offers high accuracy due to the inherent 3D nature of LiDAR data. However, LiDAR sensors are often more expensive compared to cameras, limiting their widespread adoption.

- Stereo Vision and Multi-View Approaches: Inspired by human binocular vision, stereo cameras capture images from slightly different viewpoints. This allows for depth estimation and the creation of 3D representations like disparity maps. These maps can then be used alongside image data in a CNN framework to predict 3D bounding boxes. Similarly, multi-view approaches utilize images from multiple cameras to enhance depth perception and improve 3D bounding box detection accuracy.

- Fusion-Based Techniques: Combining the strengths of different sensors offers a compelling approach. This involves fusing data from cameras (RGB or grayscale) with LiDAR or stereo vision information. By leveraging the complementary advantages of each sensor (cameras provide rich texture while LiDAR excels in depth), fusion-based techniques can achieve superior performance compared to relying on a single sensor modality.

Applications and Use Cases

3D bounding box detection finds applications across a wide range of domains, including autonomous driving, robotics, augmented reality, and urban planning. In autonomous driving, for example, accurate detection of pedestrians, vehicles, and other objects in the vehicle’s vicinity is crucial for ensuring safe navigation and collision avoidance. Similarly, in robotics, 3D bounding box detection enables robots to perceive and interact with objects in their environment, facilitating tasks such as object manipulation, navigation, and scene understanding.

Future Directions and Advancements

Looking ahead, ongoing research efforts continue to push the boundaries of 3D bounding box detection, with a focus on addressing existing challenges and advancing the state-of-the-art. Future advancements may involve the integration of multimodal sensor data, such as fusing information from cameras, LiDAR, and radar sensors to improve detection performance in diverse environmental conditions. Additionally, efforts to optimize and accelerate deep learning algorithms for deployment on edge devices are underway, aiming to enable real-time 3D object detection in resource-constrained settings.

In conclusion, 3D bounding box detection emerges as a critical component in the realm of computer vision, particularly within the domain of object detection. Its extension into the three-dimensional space revolutionizes various applications, ranging from autonomous driving to robotics and urban planning. By accurately localizing and classifying objects within a 3D environment, this technology enables precise decision-making and interaction in real-world scenarios.

The principles of 3D bounding box detection involve object localization and classification, which leverage sensor data from sources like LiDAR and depth cameras to reconstruct 3D scenes and identify objects within them. Several techniques and approaches, including monocular camera-based detection, LiDAR-based detection, stereo vision, fusion-based techniques, and learning from 2D bounding boxes, contribute to achieving accurate 3D object detection.

Looking towards the future, ongoing research efforts aim to address existing challenges and advance the state-of-the-art in 3D bounding box detection. These efforts include the integration of multimodal sensor data, optimization of deep learning algorithms for real-time deployment on edge devices, and enhancing detection performance in diverse environmental conditions. With continued advancements, 3D bounding box detection is poised to play an increasingly vital role in shaping the future of computer vision and its applications in various industries.

References

Kumar, U., Parihar, P., Vaithiyanathan, D., & Manigandan, M. (2024, January). Real-Time 3D Bounding Box Estimation with RCNN-Resnet101 and Adaptive Projection Matrices. In 2024 Fourth International Conference on Advances in Electrical, Computing, Communication and Sustainable Technologies (ICAECT) (pp. 1-5). IEEE.

Yu, Q., Du, H., Liu, C., & Yu, X. (2024). When 3D Bounding-Box Meets SAM: Point Cloud Instance Segmentation with Weak-and-Noisy Supervision. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (pp. 3719-3728).

Ho, C. J., Tai, C. H., Lin, Y. Y., Yang, M. H., & Tsai, Y. H. (2024). Diffusion-SS3D: Diffusion Model for Semi-supervised 3D Object Detection. Advances in Neural Information Processing Systems, 36.

Li, Z., Yang, Z., Gao, Y., Du, Y., Serikawa, S., & Zhang, L. (2024). 6DoF-3D: Efficient and accurate 3D object detection using six degrees-of-freedom for autonomous driving. Expert Systems with Applications, 238, 122319.

Tao, M., Zhao, C., Tang, M., & Wang, J. (2024). Objformer: Boosting 3D object detection via instance-wise interaction. Pattern Recognition, 146, 110061.

Liu, X., Zheng, C., Cheng, K. B., Xue, N., Qi, G. J., & Wu, T. (2023). Monocular 3d object detection with bounding box denoising in 3d by perceiver. In Proceedings of the IEEE/CVF International Conference on Computer Vision (pp. 6436-6446).